Durable Azure Functions with PowerShell

Last time we explored the new containerized version of the Logic Apps service. While on the topic of public previews in Azure, I also wanted to explore Durable Azure Functions, which are currently getting support for PowerShell.

At the time of writing, only a few of the defined typical application patterns are supported and documented, but as Microsoft’s goal is to support all Azure Functions languages I’m sure we’ll see more features implemented as they move forward with the service. An issue regarding the implementation can be tracked on GitHub, and since the entire code is open source we can both dig deeper to find out what’s actually live, as well as contribute if we’d like to!

Durable Azure Functions

While PowerShell support for Azure Functions was in preview I wrote an introductory post about them. Not much has changed when it comes to how they work, but something new for the ones built in PowerShell is Durable Functions.

What differentiates a Durable Azure Function from a normal one? I like thinking of Durable Functions as an orchestration workflow found in other services such as Azure Logic Apps, but “functionized” as code. To relate to the stateless vs stateful discussion from the last post, it’s a way to run stateful functions.

Tyler on the PowerShell team explains it concisely with some examples in a Twitter thread.

Durable Functions are Az Func response for long running funcs.

— Tyler Leonhardt #BlackLivesMatter (@TylerLeonhardt) October 23, 2018

They allow you to create an orchestrator func (workflow as code) which fires off other functions either serially (chaining), in parallel (fan out/in), etc and keep state between func calls. https://t.co/5jviO760yu

Function Types

In our normal stateless Azure Function apps all functions are of the same type. They can have different versions, triggers, bindings and they can of course be written in different languages, but they are all the same type of function. In Durable Functions however, there is more than one type of function.

Client Functions

A client function is categorized as such because of its role in a Durable Function app. The main purpose of a client function is to trigger another function called the orchestrator function, which represents our workflow. Microsoft states that any non-orchestrator function can be a client function, but in most cases the client function we use will be a normal stateless function with a special input binding, triggered from whichever source we want.

We’ll go through how to implement a client function later, but something we need to keep in mind when doing so is that the function needs information on which orchestrator function(s) to start.

Orchestrator Functions

Orchestrator functions do exactly what they sound like: they orchestrate, typically other functions. They are the core of the stateful Durable Functions workflow, and because of how they are written they are also the description of what that workflow looks like. They make sure that the app works in the way that we’ve designed it to, and we can design it according to application patterns that I will go into later. Because orchestrator functions are descriptions of the workflow, they need to be limited to deterministic code, code that always produces the same result for the same input no matter when or how many times it is run. If we need to create a random number or call an external API we should do it in an activity function, or use a specific method of doing so instead. We can also pass input to the orchestrator function in the form of a hashtable with keys and values, which is another way to get around the limitation.

Why? Because orchestrator functions set checkpoints, save states and replay themselves for reliability.

That’s right! Every time an orchestrator function receives a response, or a durable timer runs out (we’ll get into those later too), it executes all the steps from the start to rebuild the state. It also means that any logging we write may run again on each replay, so it may not behave exactly as we expect it to. When it encounters a function to run, it first checks with the connected storage account for the saved history of any previous runs of the current orchestration. If it finds that it has already run that step it takes the stored result instead of running it again, and the replay continues until the entire function code has finished or until the function encounters new asynchronous work to do.

This is why the workflow must be described as deterministic and yield the same results every time it’s run! Microsoft has a good list of guidelines regarding different operations that a function might want to perform, and workarounds to avoid work that is not deterministic. We can still use PowerShell code to control the code flow using conditional statements and loops as well as error handling using try/catch/finally as needed, but keep in mind that the orchestrator’s role is to coordinate work, not to perform it.
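The replay behavior described above can be sketched as a small local simulation in plain PowerShell. This is not how the Durable Functions runtime is implemented; the `$History` hashtable only stands in for the history saved in the storage account, and `Invoke-ReplayableStep` and `Invoke-DemoOrchestration` are hypothetical helpers made up for the illustration.

```powershell
# Hypothetical stand-in for the run history kept in the storage account.
$History = @{}
$script:ExecutionCount = 0

function Invoke-ReplayableStep {
    param(
        [Parameter(Mandatory)] [string] $StepName,
        [Parameter(Mandatory)] [scriptblock] $Work
    )
    if ($History.ContainsKey($StepName)) {
        # Replay: reuse the stored result instead of doing the work again.
        return $History[$StepName]
    }
    # First execution: do the work and save the result as a checkpoint.
    $script:ExecutionCount++
    $Result = & $Work
    $History[$StepName] = $Result
    return $Result
}

function Invoke-DemoOrchestration {
    # Non-deterministic work is wrapped in a step, much like an activity function.
    $Number = Invoke-ReplayableStep -StepName 'Random' -Work { Get-Random -Maximum 100 }
    $Double = Invoke-ReplayableStep -StepName 'Double' -Work { $Number * 2 }
    "$Number doubled is $Double"
}

$FirstRun = Invoke-DemoOrchestration
$Replay   = Invoke-DemoOrchestration   # runs the same code, but only reads from history

$FirstRun
$Replay
"Steps actually executed: $script:ExecutionCount"
```

Both runs print the same sentence even though a random number is involved, because the second run never reaches `Get-Random`. Had the random number been generated directly in `Invoke-DemoOrchestration`, the two runs would disagree, which is the situation the determinism rule is there to prevent.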

Activity Functions

Activity functions are the basic unit of work in our Durable Function app; they are the pieces that are actually being orchestrated. The activity functions can only be triggered by the orchestrator function, and exist as implementations of the tasks that our app needs to complete. We can pass input to them, but compared to the input to an orchestrator function it’s limited to a single object or collection. Unlike the orchestrator functions, activity functions are not limited to any specific type of work that they can do. Common tasks could for example be requesting data from an external API, triggering another service such as a Logic App, or storing data for use by another system.

One main difference between activity functions and normal Azure Functions is that activity functions only guarantee at-least-once execution. This means that there’s a chance that the function will be triggered by the orchestration more than once. Microsoft therefore recommends that we implement our activity function logic “idempotently” whenever possible, and I’d be lying if I said I didn’t have to look that word up.

It comes down to the app not breaking anything if an activity function is run more than once: the operation should have the same effect whether it’s run once or three times. If our activity function inserts data into a database we could either check for a key value to make sure that the row does not already exist, or make sure that the data storage we’re using does not accept duplicate key entries to begin with.

Something to keep in mind for activity functions is that error handling may take a central role, to ensure that operations are allowed to fail without crashing the app, assuming they fail within our control. If we’ve already entered the data into our database it may mean that the hypothetical error DuplicateRowFound from the database could be caught and discarded instead of stopping the current orchestration.
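As a minimal sketch of the idea, assuming an in-memory hashtable in place of a real database: `Add-OrderRow` simulates a store that rejects duplicate keys, and `Save-Order` plays the role of the activity function logic. Both names, the order ID and the exception type are made up for the example.

```powershell
# Hypothetical in-memory "database" keyed on a unique order ID.
$Database = @{}

function Add-OrderRow {
    param(
        [Parameter(Mandatory)] [string] $OrderId,
        [Parameter(Mandatory)] [string] $Customer
    )
    if ($Database.ContainsKey($OrderId)) {
        # Simulates the data store rejecting a duplicate key.
        throw [System.InvalidOperationException]::new("DuplicateRowFound: $OrderId")
    }
    $Database[$OrderId] = $Customer
}

function Save-Order {
    # Safe to run any number of times with the same input.
    param([string] $OrderId, [string] $Customer)
    try {
        Add-OrderRow -OrderId $OrderId -Customer $Customer
        'Inserted'
    }
    catch [System.InvalidOperationException] {
        # The row already exists, so an earlier execution did the work.
        'AlreadyPresent'
    }
}

# Simulate the activity being triggered three times for the same order.
$Results = 1..3 | ForEach-Object { Save-Order -OrderId 'A-1001' -Customer 'Contoso' }
$Results
"Rows stored: $($Database.Count)"
```

Only the first call inserts anything, and the repeated calls end quietly instead of failing the orchestration. In a real function it would be wise to catch only the specific duplicate error of the data store in use, so that other failures still surface.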

Entity Functions

Entity functions are a fourth type of function that deserves mentioning, even though they’re not currently supported in PowerShell. Entity functions are functions with a special entity trigger that are used for reading and updating pieces of state, called entities or durable entities. Entities work like tiny services that communicate via messages and they can be used to explicitly set the state of our app instead of just letting it be handled by the workflow or orchestration.

They are currently only supported for C# and JavaScript, and you can read more about them in the documentation.

Creating Durable Functions

Now that we’re up to speed on the basics for how Durable Functions work in comparison to the Azure Functions we’re used to, let’s try our hand at building them.

If you want to follow along, you will need an Azure Subscription and the following tools:

- PowerShell

- Visual Studio Code

- The Azure Functions VS Code extension

- Azure Functions Core Tools

- Optional: Azure Storage Emulator

Creating an App

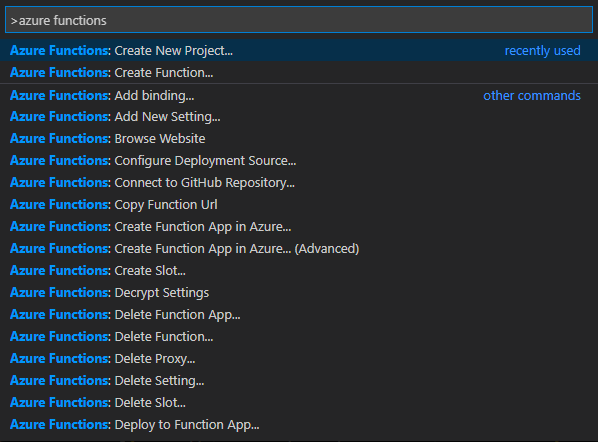

The first thing we need to do is to create the function project, which we can do using the Azure Functions extension. Bring up the command palette (default F1) and search for “Azure Functions”.

The > symbol indicates that we’re using a command. If we remove it we would instead be able to browse the files of our working directory in VS Code.

Selecting the highlighted option above and creating our project will prompt us to also create our first function in our app, which we will do using the option Durable Functions HTTP starter (preview), naming it “DurableFunctionsHttpStart”. The authorization level I chose is Function, but it won’t matter too much for the sake of this demo. If you plan on deploying the app somewhere I would suggest avoiding Anonymous unless you absolutely need it, since it would allow anyone to call your function.

To relate back to the function types, we just created our client function to trigger our workflow orchestration. If you take a look at its function.json file you can see that we can provide it the name of the orchestrator function to start, and that it has a special input binding to support Durable Functions.

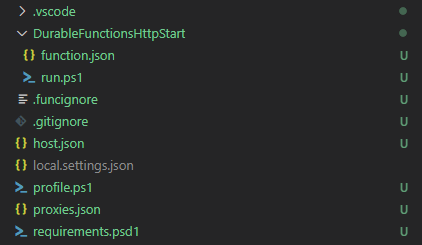

Our project is now created, and we should have a file structure looking something like this.

Before we do anything else we should open our host.json file for a quick edit. PowerShell is only supported in version 2.x of the Durable Functions, so we want to make sure our app is running the right version by updating our extension bundle. We can also take this moment to follow best practice and turn off the managed dependency setting so that our app is self-contained and does not need to install dependencies during each cold start.

Below is how my file looks after the two changes. For more information on the settings available, check out the documentation for the file.

{

"version": "2.0",

"logging": {

"applicationInsights": {

"samplingSettings": {

"isEnabled": true,

"excludedTypes": "Request"

}

}

},

"extensionBundle": {

"id": "Microsoft.Azure.Functions.ExtensionBundle",

"version": "[2.*, 3.0.0)"

},

"managedDependency": {

"enabled": false

}

}

To have a working Durable Function example app we still need two more functions, an orchestrator function and at least one activity function. Before we talk a bit more about how it all fits together, let’s create those too.

Open the command palette again, choose Azure Functions: Create function and then Durable Functions orchestrator (preview), I’m naming mine “DurableFunctionsOrchestrator”. Then do it again for another function with the type Durable Functions activity (preview). The default name for that one is “Hello” which will work fine, but during the later examples I will be changing names and configuration to fit the context. Don’t worry though, I’ll provide links to my source code with examples later on.

The project should now have two more folders with example code that we can explore, named according to our two new functions, and we just need to set one more value before we can run the function app to try it out.

In the file local.settings.json we need to set the value of AzureWebJobsStorage to either a connection string of a storage account in Azure that we want our app to store its state in, or to UseDevelopmentStorage=true to indicate that we will use the Azure Storage Emulator, which we in that case need to start before running the app.
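For reference, here is a small sketch of what that setting looks like, written from PowerShell to a sample file in the temp folder so that no real project file is touched. The file name is made up, and the `IsEncrypted` and `FUNCTIONS_WORKER_RUNTIME` entries are assumptions about what a generated PowerShell project usually contains, so compare with your own file.

```powershell
# Write a sample settings file to the temp folder so nothing real is overwritten.
$SettingsPath = Join-Path ([System.IO.Path]::GetTempPath()) 'local.settings.sample.json'

$Settings = [ordered]@{
    IsEncrypted = $false
    Values      = [ordered]@{
        # Use the local storage emulator, or paste a storage account connection string here.
        AzureWebJobsStorage      = 'UseDevelopmentStorage=true'
        FUNCTIONS_WORKER_RUNTIME = 'powershell'
    }
}
$Settings | ConvertTo-Json -Depth 3 | Set-Content -Path $SettingsPath -Encoding utf8

# Read it back to confirm which storage the app would use.
$Loaded = Get-Content -Path $SettingsPath -Raw | ConvertFrom-Json
$Loaded.Values.AzureWebJobsStorage
```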

If you opt to run the storage emulator locally, you can start it using the following line of code. You may have to modify it depending on where you installed it.

& 'C:\Program Files (x86)\Microsoft SDKs\Azure\Storage Emulator\AzureStorageEmulator.exe' start

Once we’ve set the storage connection we can start the app by pressing F5 in VS Code or by running the below command from the function directory.

func host start

This will show us a nice overview of our three available functions, as well as a URL to trigger our client function to start our workflow.

DurableFunctionsHttpStart: [POST,GET] http://localhost:7071/api/orchestrators/{FunctionName}

DurableFunctionsOrchestrator: orchestrationTrigger

Hello: activityTrigger

We will also see when any orchestrations are triggered, and any output or logging that the function does will be displayed in our console too. We can either browse to the URL for a quick overview, or we can use PowerShell to invoke our new endpoint.

Invoke-RestMethod 'http://localhost:7071/api/orchestrators/DurableFunctionsOrchestrator'

The result we get contains an id for the running instance, and a few endpoints that are examples of how to use the Durable Functions HTTP API.

{

"statusQueryGetUri": "http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b?taskHub=TestHubName&connection=Storage&code=<your-code>",

"terminatePostUri": "http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b/terminate?reason={text}&taskHub=TestHubName&connection=Storage&code=<your-code>",

"sendEventPostUri": "http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b/raiseEvent/{eventName}?taskHub=TestHubName&connection=Storage&code=<your-code>",

"id": "56f21573-5da1-4edb-ad0e-f4d1ae19238b",

"purgeHistoryDeleteUri": "http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b?taskHub=TestHubName&connection=Storage&code=<your-code>",

"rewindPostUri": "http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b/rewind?reason={text}&taskHub=TestHubName&connection=Storage&code=<your-code>"

}

What just happened is that we started our first orchestration instance, and through that we also created a task hub to keep track of our state, history, instances and more. Looking through the result of our request to the HTTP starter we can see that the endpoints correspond to available operations.

- Get status

- Terminate

- Trigger an event from an external source

- Purge the history

- Rewind the orchestration

All operations have some extra non-default options to them that we can learn more about by consulting the documentation. As of writing, the rewind functionality is in preview. It’s used to restart a failed orchestration instance by replaying the most recent failed operations.
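Some of the returned URIs contain placeholders such as {text} and {eventName} that need to be filled in before they can be called. The sketch below shows one way of doing that; `Resolve-DurableUri` is a hypothetical helper and not part of any module, and the instance id and code in the template are placeholders.

```powershell
function Resolve-DurableUri {
    # Hypothetical helper: replaces {placeholder} tokens in a management URI.
    param(
        [Parameter(Mandatory)] [string] $Template,
        [hashtable] $Values = @{}
    )
    $Uri = $Template
    foreach ($Key in $Values.Keys) {
        # Encode the value so that spaces and special characters survive in the query string.
        $Encoded = [uri]::EscapeDataString([string]$Values[$Key])
        $Uri = $Uri.Replace("{$Key}", $Encoded)
    }
    $Uri
}

$Template = 'http://localhost:7071/runtime/webhooks/durabletask/instances/<instance-id>/terminate?reason={text}&taskHub=TestHubName&connection=Storage&code=<your-code>'
$TerminateUri = Resolve-DurableUri -Template $Template -Values @{ text = 'No longer needed' }
$TerminateUri

# With the app running and a real instance id and code in place,
# the instance could then be terminated with:
# Invoke-RestMethod -Method Post -Uri $TerminateUri
```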

To receive the status of our orchestration we can invoke the previously provided endpoint called statusQueryGetUri.

PipeHow:\Blog> Invoke-RestMethod 'http://localhost:7071/runtime/webhooks/durabletask/instances/56f21573-5da1-4edb-ad0e-f4d1ae19238b?taskHub=TestHubName&connection=Storage&code=<code>'

name : DurableFunctionsOrchestrator

instanceId : 56f21573-5da1-4edb-ad0e-f4d1ae19238b

runtimeStatus : Completed

input :

customStatus :

output : {Hello Tokyo!, Hello Seattle!, Hello London!}

createdTime : 2020-10-06 21:51:38

lastUpdatedTime : 2020-10-06 21:51:39

If the workflow was still running the status would indicate that, and we would have to check the status periodically for output. It was quick to run though, and we can see by the status that the workflow has completed, which also provides us with the output of our activity function calls as an array.
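Checking the status periodically is easy to script. Below is a minimal sketch of a polling loop; `Wait-OrchestrationOutput` is a hypothetical helper, and the `-GetStatus` parameter only exists so that the loop can be tried with a fake status source when no function app is running.

```powershell
function Wait-OrchestrationOutput {
    param(
        [Parameter(Mandatory)] [string] $StatusUri,
        [int] $MaxAttempts = 30,
        [int] $DelaySeconds = 2,
        # Injectable so the loop can be tried without a running app.
        [scriptblock] $GetStatus = { param($Uri) Invoke-RestMethod -Uri $Uri }
    )
    for ($Attempt = 1; $Attempt -le $MaxAttempts; $Attempt++) {
        $Status = & $GetStatus $StatusUri
        # Stop polling once the orchestration has reached a final state.
        if ($Status.runtimeStatus -in 'Completed', 'Failed', 'Terminated') {
            return $Status
        }
        Start-Sleep -Seconds $DelaySeconds
    }
    throw "Orchestration did not finish after $MaxAttempts attempts."
}

# Fake status source: reports Running twice, then Completed.
$script:Calls = 0
$FakeStatus = {
    param($Uri)
    $script:Calls++
    if ($script:Calls -lt 3) {
        [pscustomobject]@{ runtimeStatus = 'Running' }
    }
    else {
        [pscustomobject]@{ runtimeStatus = 'Completed'; output = 'Hello Tokyo!' }
    }
}

$Final = Wait-OrchestrationOutput -StatusUri 'http://localhost:7071/placeholder' -DelaySeconds 0 -GetStatus $FakeStatus
$Final.runtimeStatus
```

Against a running app we would leave out `-GetStatus` and pass the real statusQueryGetUri value from the start response instead of the placeholder URL.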

Exploring Commands

Before we go into more examples I’d like for us to take a deeper look at what commands are actually available to us inside the function, to learn more about our options in Durable Functions and see if there’s something that isn’t documented.

In the location where we installed our function core tools we can find the built-in modules that are accessible during runtime in our PowerShell worker. One of the modules is specifically built for managing the bindings and behind-the-scenes function work and holds the commands that we’re interested in. It can differ based on what you used to install the tools, but for me that module is found in the following directory:

C:\Program Files\Microsoft\Azure Functions Core Tools\workers\powershell\7\Modules\Microsoft.Azure.Functions.PowerShellWorker

Here we will find two files, the psm1 module file and the psd1 data file, or module manifest. Let’s have a look at the latter one, since it holds metadata about the module.

Import-PowerShellDataFile is a command we can use to safely read it, which results in a hashtable with information about the module.

Name Value

---- -----

VariablesToExport {}

Author Microsoft Corporation

FunctionsToExport {Start-NewOrchestration, New-OrchestrationCheckStatusResponse}

Description The module used in an Azure Functions environment for setting and retrieving Output Bindings.

CmdletsToExport {Push-OutputBinding, Get-OutputBinding, Trace-PipelineObject, Set-FunctionInvocationContext…}

PrivateData {PSData}

ModuleVersion 0.3.0

ScriptsToProcess {}

RequiredAssemblies {}

FormatsToProcess {}

AliasesToExport {}

GUID f0149ba6-bd6f-4dbd-afe5-2a95bd755d6c

TypesToProcess {}

CompanyName Microsoft Corporation

RequiredModules {}

NestedModules {Microsoft.Azure.Functions.PowerShellWorker.psm1, Microsoft.Azure.Functions.PowerShellWorker.dll}

PowerShellVersion 6.2

CompatiblePSEditions {Core}

Copyright (c) Microsoft Corporation. All rights reserved.
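To try the command without having the core tools installed, here is a small sketch that reads a throwaway manifest instead. The file name and its contents are made up for the example; against the real module you would point `-Path` at the psd1 file in the directory mentioned above.

```powershell
# Create a throwaway manifest so the example runs anywhere.
$ManifestPath = Join-Path ([System.IO.Path]::GetTempPath()) 'SampleWorker.psd1'
@'
@{
    ModuleVersion   = '0.3.0'
    CmdletsToExport = @('Push-OutputBinding', 'Get-OutputBinding')
    NestedModules   = @('SampleWorker.psm1', 'SampleWorker.dll')
}
'@ | Set-Content -Path $ManifestPath

# The file is parsed as data, so no code inside it is executed.
$Manifest = Import-PowerShellDataFile -Path $ManifestPath

$Manifest.CmdletsToExport
$Manifest.NestedModules
```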

There are mainly two values we may be interested in, CmdletsToExport and NestedModules.

The first one shows us which commands the module brings, which is what we were looking for.

Push-OutputBinding

Get-OutputBinding

Trace-PipelineObject

Set-FunctionInvocationContext

Start-DurableTimer

Invoke-ActivityFunction

Wait-ActivityFunction

Now we just need to know how they work, or at least what data they expect.

The second value is NestedModules, where we can see that the module actually includes two module files: the psm1 script file and a compiled dll file. To save you the trouble of looking, not all commands are defined in the script file. If we look around a bit we will find that the compiled file is actually in the parent folder of the modules directory, and has the rest of them.

What we were looking for was more information on the cmdlets available, which we can find by simply importing that compiled module file to see what’s in it.

PipeHow:\Blog> Import-Module "C:\Program Files\Microsoft\Azure Functions Core Tools\workers\powershell\7\Microsoft.Azure.Functions.PowerShellWorker.dll"

PipeHow:\Blog> Get-Command -Module Microsoft.Azure.Functions.PowerShellWorker

CommandType Name Version Source

----------- ---- ------- ------

Cmdlet Get-OutputBinding 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Invoke-ActivityFunction 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Push-OutputBinding 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Set-FunctionInvocationContext 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Start-DurableTimer 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Trace-PipelineObject 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Wait-ActivityFunction 3.0.452.0 Microsoft.Azure.Functions.PowerShellWorker

There’s not a lot of help documentation written for them, but we can at least get the syntax for three that we will use today.

Invoke-ActivityFunction -FunctionName <string> [-Input <Object>] [-NoWait] [<CommonParameters>]

Wait-ActivityFunction -Task <ActivityInvocationTask[]> [<CommonParameters>]

Start-DurableTimer -Duration <timespan> [<CommonParameters>]
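Syntax lines like these can be printed with Get-Command and its -Syntax switch. The sketch below uses two built-in commands as stand-ins so that it runs in any PowerShell session; swapping in the worker cmdlet names is an assumption that only holds once the compiled module has been imported as shown earlier.

```powershell
# Stand-in command names; replace with e.g. 'Start-DurableTimer' after importing the worker module.
$CommandNames = 'Get-Random', 'Get-Date'

$Syntax = foreach ($Name in $CommandNames) {
    # -Syntax returns the parameter sets as text instead of a command object.
    Get-Command -Name $Name -Syntax
}

$Syntax
```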

If we’ve read the documentation we know about the first two from the examples.

- Invoke-ActivityFunction starts an activity function, optionally async.

- Wait-ActivityFunction awaits asynchronous activity functions for results.

- Start-DurableTimer is lacking documentation, remember it for later!

We’ve now both run a basic Durable Function app and looked through the commands to know more about what we have to work with. We’re free to do what we want with that knowledge, but it raises another question.

Where do we go from here?

Application Patterns

Azure Functions can aid us when building solutions where smaller parts, microservices, handle their own logic or integrations. This comes with many advantages such as code management, versioning and potential cost optimizations, but it can bring complexity too. It might be easier to just throw all the logic into one single file and run it on a machine somewhere, but the risk is that we instead eventually leave behind a monolithic monster script for someone else to manage.

The main purpose of Durable Functions is to assist us in coordinating and orchestrating workflows when building these serverless solutions, but as with all things it won’t always be the best solution for our needs.

Our goal of today is to get some understanding of when it can be, and to do that we will go through some defined application patterns where the strengths of Durable Functions are clear, with examples of each pattern. All the examples in this post can also be found on my GitHub.

The default app that the Azure Functions extension in VS Code generates is a good start, and we will use its client function as a base for our examples.

# Client Function - DurableFunctionsHttpStart

using namespace System.Net

param($Request, $TriggerMetadata)

$FunctionName = $Request.Params.FunctionName

$InstanceId = Start-NewOrchestration -FunctionName $FunctionName

Write-Host "Started orchestration with ID = '$InstanceId'"

$Response = New-OrchestrationCheckStatusResponse -Request $Request -InstanceId $InstanceId

Push-OutputBinding -Name Response -Value $Response

The client function is triggered by an HTTP request and simply starts the orchestration, as we saw during our earlier example. This function will mostly stay as it is between the different patterns, while the other functions will change.

If you decide to follow along, managing your instances may come in handy as you run the different examples. We can manage instances individually, or we can run the following command from the function core tools in the root of our project between tests to clear everything that’s been saved during experimentation by deleting the task hub completely for a fresh start.

func durable delete-task-hub

This will delete all task hubs and with it any saved state and run history, so if you’re interested in keeping that or exploring the storage, do so before running the command.

Documented Patterns

What I found when exploring Durable Functions is that the documentation is not quite up to date with the rapid development happening in the open source project on GitHub, so I will first go through three of the patterns, those that Microsoft has documented for PowerShell as of writing, and then discuss a few more.

During all examples I will display the code of the different function script files, but note that I’ve also edited the function names by renaming the folders, and the input binding names in each function.json to match the name of the parameter displayed in each activity function.

If you’re following along you will want to do so as well, and if you’re unsure of the changes you can always double check with the code on my GitHub.
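To show what that matching means in practice, here is a sketch that compares the binding name in a function.json with the parameter name in its script. Both file contents are assumptions written inline for the example, modeled on the FlipBits function used further down, so check them against the files in your own project.

```powershell
# Assumed contents of an activity function's function.json (illustrative).
$FunctionJson = @'
{
  "bindings": [
    { "name": "Number", "type": "activityTrigger", "direction": "in" }
  ]
}
'@

# Assumed contents of the matching run.ps1.
$RunScript = @'
param([uint]$Number)
-bnot $Number
'@

# The name of the trigger binding is what the runtime passes input as.
$BindingName = ($FunctionJson | ConvertFrom-Json).bindings |
    Where-Object { $_.type -eq 'activityTrigger' } |
    Select-Object -ExpandProperty name

# Parse the script without running it and read the first parameter name.
$Ast = [System.Management.Automation.Language.Parser]::ParseInput($RunScript, [ref]$null, [ref]$null)
$ParameterName = $Ast.ParamBlock.Parameters[0].Name.VariablePath.UserPath

"Binding: $BindingName, parameter: $ParameterName, match: $($BindingName -eq $ParameterName)"
```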

Function Chaining

Function Chaining is one of the simpler patterns used and suits solutions where the sequence of the steps is important. It can be used where the application needs to work like a pipeline and each step expects data from or is dependent on the completion of a previous step.

Normal stateless Azure Functions can achieve this pattern (as well as some of the other patterns) by using other services as complements, for example Queue Storage or Service Bus, but implementing the workflow as a Durable Functions app makes it clear and readable using an orchestrator function, and reduces the steps needed to implement important features such as error handling.

Random FlipBits ToBinary ToBinary

<⚡> -> █ -> <⚡> -> █ -> <⚡> -> █ -> <⚡>

Pipeline = █

Function = <⚡>

For the example I’ve created a simple app that generates a random number, flips the bits in it and then outputs the original and flipped number as binary.

If your binary skills are rusty, don’t worry, it’s only for a simple demonstration of the pattern.

# Orchestrator Function - BinaryOrchestrator

param($Context)

$RandomNumber = Invoke-ActivityFunction -FunctionName 'Random'

$BitFlippedNumber = Invoke-ActivityFunction -FunctionName 'FlipBits' -Input $RandomNumber

# Output binary strings

Invoke-ActivityFunction -FunctionName 'ToBinary' -Input $RandomNumber

Invoke-ActivityFunction -FunctionName 'ToBinary' -Input $BitFlippedNumber

The entire workflow of our Durable Function app is described above as code in our orchestrator function. Note that it only has deterministic code: the random number is generated in an activity function to make sure that the orchestrator can replay properly.

- Random generates a random number.

- FlipBits flips bit values, a binary value of 1010 would become (0)101.

- ToBinary gets and outputs the original random number in binary.

- ToBinary gets and outputs the flipped number in binary.

# Activity Function - Random

param($InputData)

Get-Random

Our first function simply generates and outputs a random number to be used further into our workflow.

# Activity Function - FlipBits

param([uint]$Number)

# Cast to unsigned number and flip bits

-bnot $Number

The second function uses the -bnot operator, which stands for “Bitwise NOT” and can be used to negate each bit in a number, for example a binary value of 101101 becomes (0)10010. Note that any leading zeroes will not be displayed.

As for the type uint, a normal integer in .NET is 32 bits in size, split between positive and negative numbers. By casting the input to an unsigned integer, we limit it to non-negative numbers, with a maximum value roughly twice as large. This is to make sure that the bit flip gets the correct effect of simply inverting the binary value. Understanding the details isn’t necessary for the example, but there are plenty of resources online to learn more about unsigned integers, bits and data types if you’re curious.
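A quick way to see the difference is to flip the same small number as a signed and as an unsigned value. This is a minimal sketch assuming PowerShell 7, the version used by the worker in this post; the number 5 is an arbitrary choice.

```powershell
# Flipping the bits of a signed integer gives a negative number.
$Signed = -bnot 5

# Casting to an unsigned 32-bit integer first keeps the result positive.
$Unsigned = -bnot [uint32]5

"Signed:   $Signed"
"Unsigned: $Unsigned"
```

With the signed value the highest bit is interpreted as the sign, which is why the result ends up below zero. The unsigned result can be passed on to another function expecting a uint without a failing conversion.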

# Activity Function - ToBinary

param([uint]$Number)

[Convert]::ToString($Number, 2)

In our third function we convert the generated number to a binary value and represent it using a string. I’ve blogged about numeral systems before if you’re curious for how to convert between decimal, binary, hexadecimal and more.

Running the app and starting an instance like we did earlier will let us invoke the status endpoint, which once completed will give us an output that looks something like this.

# By adding spaces we can see that the bit flip worked

  111000111100000111010000010001

11000111000011111000101111101110
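If lining up the digits by eye feels error-prone, the two strings can also be compared in code. This small sketch pads the first value with the leading zeroes that were not displayed and then looks for positions where the bits are identical, which should not exist after a bit flip.

```powershell
$Original = '111000111100000111010000010001'
$Flipped  = '11000111000011111000101111101110'

# Pad the shorter string with the leading zeroes that were not displayed.
$Padded = $Original.PadLeft(32, '0')

# After a bit flip every position should differ between the two strings.
$Identical = @(0..31 | Where-Object { $Padded[$_] -eq $Flipped[$_] })

$Padded
$Flipped
"Positions with identical bits: $($Identical.Count)"
```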

All in all it’s a simple showcase of the Function Chaining pattern, showing how to use the results from one activity in the next.

Something I’ve found that we need to keep in mind for functions and maybe especially for Durable Functions is that when we send data between them we need to be extra clear with which data type we expect and send. Sometimes converting it to JSON and back is a good way to make sure that we get it in the correct format, which is a technique also used by some internal integrations behind the scenes. Although it might not have been written with PowerShell in mind, some more information on the behavior of bindings can be found here.
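As a sketch of that JSON round trip, assuming a made-up payload: the sending side serializes explicitly, and the receiving side parses the string and casts each value to the type it expects. The property names are hypothetical.

```powershell
# Hypothetical payload to hand over to another function.
$Payload = @{
    Type     = 'education'
    Quantity = 3
    Tags     = @('weekend', 'indoors')
}

# Serialize explicitly; -Depth guards against nested data being cut off.
$Json = $Payload | ConvertTo-Json -Depth 5 -Compress

# The receiving function parses the string and casts to the types it expects.
$Received = $Json | ConvertFrom-Json
[int]      $Quantity = $Received.Quantity
[string[]] $Tags     = $Received.Tags

"$($Received.Type): $Quantity item(s), tags: $($Tags -join ', ')"
```

Casting on the receiving side matters because the numeric type that comes out of ConvertFrom-Json is not necessarily the one that went in.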

Fan-out / Fan-in

If you thought that Durable Functions might save you some architectural challenges in building a solution using the Function Chaining pattern, you’ll realize quickly that it’s only getting better from there.

The Fan-out / Fan-in pattern is instead used where the order of operations isn’t important, for example when we want to do a lot of operations in parallel to save time. Doing so is to fan out and is traditionally done by starting asynchronous jobs in one way or another.

The problem is often to fan in. If you need to gather the results of the jobs or do some type of aggregation work, the orchestrator function can really shine.

Gathering the results of tasks that may have finished at different times is a challenge, and Durable Functions provides us with an easy way of doing so, with built-in reliability to account for errors or outages.

Bored

<⚡>

Types ⬈ ⬊

<⚡> -> █ -> <⚡> -> █

⬊ ⬈

<⚡>

Pipeline = █

Function = <⚡>

To demonstrate the pattern I’m going to call the bored API, an external API for finding things to do. The API lets us get an activity to do when we’re bored, but it doesn’t have any endpoint where we can get several activities to do. Since we can only get one at a time we’re going to create a simple orchestration to run parallel functions and quickly gather a varied list of things to do, maybe for the weekend.

# Orchestrator Function - BoredOrchestrator

param($Context)

$Types = Invoke-ActivityFunction -FunctionName 'Types'

$ParallelFunctions = foreach ($Type in $Types) {

Invoke-ActivityFunction -FunctionName 'Bored' -Input $Type -NoWait

}

Wait-ActivityFunction -Task $ParallelFunctions

The orchestrator function starts with calling an activity function to get a list of types of activities that we want to get from the API. We then loop through the types and trigger an activity function to get activities according to the types in the list, starting each activity function asynchronously with the -NoWait parameter and storing the function tasks in $ParallelFunctions.

Using Wait-ActivityFunction we then await and output the result of all of the functions. Building this workflow in Durable Functions also makes sure that if the Bored API has problems, or if only one of the activity functions fails, the replaying of the orchestration does not run any of the completed functions again.

# Activity Function - Types

param($InputData)

$Types = 'education','cooking','relaxation','recreational','music'

1..10 | ForEach-Object {

# -Maximum is exclusive, so use the array length to be able to pick the last type

$Types[(Get-Random -Minimum 0 -Maximum $Types.Count)]

}

The first activity function lists a few types supported by the API and generates a list of ten randomly chosen types from the list. By keeping this list separate from the orchestrator function we allow it to support random types for each new orchestration, again without breaking the replay functionality of the workflow.

If we wanted to, we could also have generated this list in our client function and passed it to the orchestrator as input.
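One detail worth knowing when picking random array elements by index is that the -Maximum value of Get-Random is exclusive. The sketch below demonstrates this with the same five types, and shows the alternative of letting Get-Random choose directly from the collection.

```powershell
$Types = 'education', 'cooking', 'relaxation', 'recreational', 'music'

# -Maximum is exclusive: with 4 as the maximum the index never exceeds 3,
# which means that the fifth element is never picked.
$Indexes = 1..1000 | ForEach-Object { Get-Random -Minimum 0 -Maximum 4 }
$HighestIndex = ($Indexes | Measure-Object -Maximum).Maximum
"Highest index seen: $HighestIndex"

# Letting Get-Random choose from the collection can return every element.
$Picked = 1..10 | ForEach-Object { $Types | Get-Random }
$Picked
```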

# Activity Function - Bored

param($Type)

$Result = Invoke-RestMethod "http://www.boredapi.com/api/activity?type=$Type"

"$Type - $($Result.activity)"

In our second activity function we call the Bored API to get an activity to do when we’re bored, based on the input type which is randomly generated once per orchestration but persists through replays.

Running the app and starting a new orchestration like we did before gives us ten activities, in the console where the app is running and as output if we ask the app for a status update using PowerShell when it’s done.

When I ran it I got a fairly varied list, though I’ve already completed the first one!

recreational - Start a blog for something you're passionate about

education - Learn how to make a website

relaxation - Draw and color a Mandala

cooking - Learn a new recipe

recreational - Play a video game

education - Learn to sew on a button

recreational - Explore a park you have never been to before

recreational - Solve a Rubik's cube

cooking - Make bread from scratch

recreational - Learn how to use a french press

Try running it several times for different results!

It’s a simple example, but you can clearly see the use cases for the pattern. Maybe you need to set a configuration for a lot of virtual machines, or process all the orders in the system for a daily report? Either way it’s really a joy to work with, compared to building your own entire application architecture to support the Fan-out / Fan-in pattern.

Async HTTP API

The third and last of what we could call the currently documented patterns is used to coordinate long-running operations with external clients.

Start DoWork

<⚡> --> █ --> <⚡>

⬊ ⬋

-> █ <-

⬈

<⚡>

GetStatus

Pipeline = █

Function = <⚡>

As an example, let’s say that we create a Durable Function workflow that deploys a large set of infrastructure in Azure, configures it, pushes some code to web apps and more. The bottom line is that it takes a long time to run, and we don’t want the source that started our orchestration to sit waiting for it. Instead of holding the connection open until our app is ready to respond, we let the end client poll for status updates of our workflow.

Does it seem familiar? That’s because we’ve already used this pattern!

It’s actually already built into the Durable Functions workflow itself. Any response from the client function that starts the orchestration will automatically only be an id and a set of endpoints for our convenience, and we only get the actual output once it’s completed, by polling the status endpoint.

It makes sense if we go back to take a look at our client function that we generated earlier using the VS Code extension, since it only returns the status as below.

$Response = New-OrchestrationCheckStatusResponse -Request $Request -InstanceId $InstanceId

Push-OutputBinding -Name Response -Value $Response
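From the external client’s side, the polling could look something like the sketch below. It assumes that the start response contains a statusQueryGetUri property and that the status object exposes runtimeStatus, which is how the Durable Functions HTTP API documentation describes it. The function name Wait-OrchestrationResult and its default values are my own.

```powershell
# Hypothetical helper: polls the status endpoint until the orchestration ends
function Wait-OrchestrationResult {
    param(
        [Parameter(Mandatory)]
        [string]$StatusUri,

        [int]$IntervalSeconds = 5,

        [int]$MaxAttempts = 60
    )

    # Runtime statuses after which the orchestration will not change anymore
    $TerminalStatuses = 'Completed', 'Failed', 'Terminated', 'Canceled'

    for ($Attempt = 1; $Attempt -le $MaxAttempts; $Attempt++) {
        $Status = Invoke-RestMethod -Uri $StatusUri

        if ($Status.runtimeStatus -in $TerminalStatuses) {
            return $Status
        }

        Start-Sleep -Seconds $IntervalSeconds
    }

    throw "The orchestration did not finish after $MaxAttempts attempts."
}

# Usage, assuming $Start holds the response from the client function:
# $Final = Wait-OrchestrationResult -StatusUri $Start.statusQueryGetUri
# $Final.output
```

Capping the number of attempts keeps the client from polling forever if the workflow gets stuck.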

We’ve already taken a look at the pattern through previous examples, but there’s always a lot more reading to do in the documentation.

Less Documented Patterns

We’ve gone through the only three patterns supported for PowerShell, at least if we are to believe the documentation. When we looked through the available commands earlier, though, we found one that hinted otherwise.

Monitor

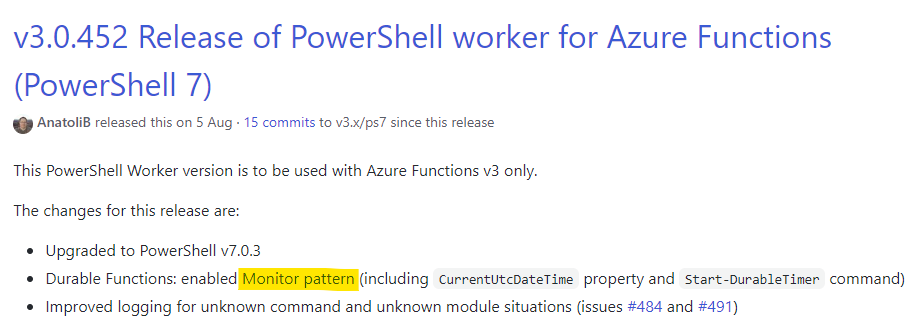

The command Start-DurableTimer isn’t mentioned anywhere in the documentation, but if we start looking elsewhere we can find some clues. Among the GitHub project releases of the Azure Function PowerShell worker, the one with the modules we explored a little earlier, we can see two releases that are interesting.

The first one is version 3.0.452, where the Monitor pattern is implemented.

Indeed, it seems we have more patterns!

The Monitor pattern in its simplest form is a recurring process in a workflow. A typical use case is to poll for updates until a certain condition is met. For example, we could tie two apps together nicely by using this pattern to monitor another Durable Function orchestration for completion.

In a way it’s a reversal of the previous pattern. Instead of letting an external client poll the status endpoint of our workflow until our long-running operation is done, we are the ones doing the polling of an external endpoint, potentially for a long time. This is done using timers, but since our orchestrator function code needs to be deterministic we use a special type of timer that supports the workflow with replays.

SnowMonitor

⬈ -> ⬊ Notify

↑ <⚡> ↓ -> <⚡>

⬉ <- ⬋

⇅

<⚡>

PollWeather

Function = <⚡>

Using Start-DurableTimer and the MetaWeather API we can create a Durable Function workflow that checks daily until the forecast expects snow the next day, and does something when it does, such as sending an email. The weather API is free, easy to use and requires no authentication, so if you’re curious about how the code works you can look through the documentation of the API and try it too!

This will be the first time where we actually use the $Context input binding parameter in our orchestrator function, so let’s take a look at it before digging into the code.

# We can add this line to our function for details about the context

Get-Member -InputObject $Context

Using Get-Member is a quick way to let us see what the context can help us with. By adding it to our function temporarily we will see that there are two properties that we can make use of, CurrentUtcDateTime and Input.

Name MemberType Definition

---- ---------- ----------

Equals Method bool Equals(System.Object obj)

GetHashCode Method int GetHashCode()

GetType Method type GetType()

ToString Method string ToString()

CurrentUtcDateTime Property datetime CurrentUtcDateTime {get;}

Input Property System.Object Input {get;}

My guess is that the context will hold more features in the future, as it does in other languages supported in Durable Functions.

# Orchestrator Function - SnowMonitor

param($Context)

$Tomorrow = $Context.CurrentUtcDateTime.AddDays(1)

$ExpiryTime = $Context.Input.ExpiryTime

while ($Tomorrow -lt $ExpiryTime) {

$WeatherData = Invoke-ActivityFunction -FunctionName 'PollWeather' -Input $Tomorrow

if ($WeatherData.weather_state_name.Contains('Snow')) {

break

}

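Start-DurableTimer -Duration (New-TimeSpan -Days 1)

# Recalculate the date once the timer has fired, otherwise the loop condition never changes

$Tomorrow = $Context.CurrentUtcDateTime.AddDays(1)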

}

if ($Tomorrow -lt $ExpiryTime) {

Invoke-ActivityFunction -FunctionName 'Notify'

}

The CurrentUtcDateTime property is a replay-safe way to get the current date directly in our orchestrator function, and it was implemented in the same release as this pattern. After getting tomorrow’s date using the context we also get the expiration time for our orchestration, an input we provide the workflow with that I will explain soon.

We then start a loop that runs until that defined time, in which we send tomorrow’s date to an activity function to get all weather forecasts. We check the results of the activity function for any snow forecasts, and break the loop if we find any, to take action.
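The snow check itself can be tried out without the Functions runtime. The sketch below assumes that the activity function returns an array of forecast objects that each have a weather_state_name property, which is what the MetaWeather API returned at the time. The function name Test-SnowForecast is hypothetical. It uses -match instead of the Contains method, so that it behaves the same whether one forecast or several come back.

```powershell
# Hypothetical helper: returns $true if any forecast mentions snow
function Test-SnowForecast {
    param(
        # Forecast objects, each assumed to have a weather_state_name property
        [object[]]$Forecast
    )

    $SnowForecasts = @($Forecast | Where-Object { $_.weather_state_name -match 'Snow' })

    $SnowForecasts.Count -gt 0
}

# Sample data shaped like the API response, for trying it out locally
$Sample = @(
    [pscustomobject]@{ weather_state_name = 'Light Cloud' }
    [pscustomobject]@{ weather_state_name = 'Snow' }
)

Test-SnowForecast -Forecast $Sample
```

Note that -match is case-insensitive and matches substrings, so adjust the pattern if you need something stricter.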

Microsoft recommends that we avoid infinite loops, but at the time of writing PowerShell has no implementation of their suggested workaround, which is why we send an expiration time as input to the function. We do this by adding the -InputObject parameter when starting our workflow in our client function, the very first one we created.

To keep the functionality separated from the previous examples I will create a copy of the client function and add the following code to that one.

$OrchestratorInput = @{

'ExpiryTime' = Get-Date '2021-01-01'

}

$InstanceId = Start-NewOrchestration -FunctionName $FunctionName -InputObject $OrchestratorInput

If our workflow finds that there is a forecast for snow we break out of the loop and continue our program, otherwise we start a durable timer for one day which will set our orchestration to sleep until the time has passed. A durable timer can be set for up to 7 days, and if you need longer delays than that you can simulate them by combining timers with loops.
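One way to combine timers with loops for a longer delay is to split the total duration into chunks that each fit within the limit. This is only a sketch: the function name Split-TimerDuration is hypothetical, and the call to Start-DurableTimer is shown as a comment since it only works inside an orchestrator function.

```powershell
# Hypothetical helper: splits a long duration into chunks of at most $MaxChunk
function Split-TimerDuration {
    param(
        [Parameter(Mandatory)]
        [timespan]$Duration,

        [timespan]$MaxChunk = (New-TimeSpan -Days 7)
    )

    $Remaining = $Duration

    while ($Remaining -gt [timespan]::Zero) {
        # Take a full chunk, or whatever is left if that is less
        $Chunk = if ($Remaining -gt $MaxChunk) { $MaxChunk } else { $Remaining }

        $Chunk
        $Remaining -= $Chunk
    }
}

$Chunks = Split-TimerDuration -Duration (New-TimeSpan -Days 20)

# In an orchestrator function the chunks could then be awaited one by one:
# foreach ($Chunk in $Chunks) { Start-DurableTimer -Duration $Chunk }
```

For a delay of 20 days this produces three timers of 7, 7 and 6 days.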

If we find out that there’s snow the next day and break out of the loop, we trigger another activity function where we could for example send an email using the Microsoft Graph API or trigger an event in Azure as our final step, but this isn’t implemented in this simple example.

There are many services where you could notify someone about snow, and even more ways to integrate them into your Durable Functions workflow.

# Activity Function - PollWeather

param([datetime]$Date)

# https://www.metaweather.com/api/location/search/?query=Stockholm

# Stockholm's id is 906057, stored in Application Settings

$LocationId = $Env:LocationId

$APIDatePath = $Date.ToString("yyyy/MM/dd", [cultureinfo]::InvariantCulture)

Invoke-RestMethod "https://www.metaweather.com/api/location/$LocationId/$APIDatePath"

The first activity function takes a date as input, in this case the next day’s date, and gets the location id from the application settings of the function, retrieved in advance from the API.

This is set in the local.settings.json file if you’re running the app locally, and you can read more about the file here. The API is then invoked and any results are output back to the orchestrator function.

# Activity Function - Notify

param($InputData)

# Implement a notification mechanism here

"Notified!"

The second activity function is only here to provide us a base to build upon for when we need to notify an external source or an integrated system.

If I go ahead and start this workflow I would expect it to run for about a month. I’m thinking at least someone would have predicted an early snow by then.

Human Interaction

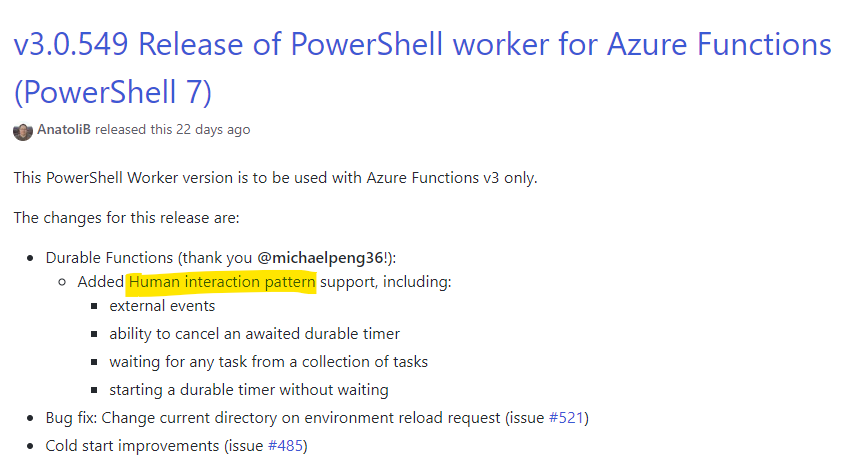

I mentioned that there were two releases of the PowerShell function worker on GitHub that caught my attention. The other one was version 3.0.549, which implemented support for the Human Interaction pattern.

Quite often in automation we encounter processes where someone has to make a decision. A manager has to approve someone being granted access to a system, or there’s a financial decision to be made. This can make our workflow come to a halt, or even make it impossible to implement because of limitations in our tools.

This pattern helps us solve the problem of including people in our processes, or as Microsoft would put the issue:

Involving humans in an automated process is tricky because people aren’t as highly available and as responsive as cloud services.

Which is not necessarily a bad thing, we just need to adapt our workflow a bit.

ProcessApproval

<⚡>

RequestApproval ⬈

<⚡> -> ⏱ ->

⬊

<⚡>

Escalate

Wait/Timeout = ⏱

Function = <⚡>

As in the Monitor pattern we can use a durable timer, but this time to start a waiting period for an approval, during which our app listens for an external approval event.

At the time of writing there’s a small caveat if you want to follow along with this example. While support for this pattern in the PowerShell worker has been released, as we saw above, it’s not yet included in the newest version of the Functions Core Tools. This may have changed since then, but until it does you will need to clone the repository for the PowerShell functions worker and follow the instructions for how to build and run it on your machine.

Exploring the newest module version in the same way that we did earlier shows that there are some new commands, and that Wait-ActivityFunction has been replaced by a new command called Wait-DurableTask, with the old name kept as an alias.

CommandType Name Version Source

----------- ---- ------- ------

Alias Wait-ActivityFunction 0.3.0 Microsoft.Azure.Functions.PowerShellWorker

Function New-OrchestrationCheckStatusResponse 0.3.0 Microsoft.Azure.Functions.PowerShellWorker

Function Send-DurableExternalEvent 0.3.0 Microsoft.Azure.Functions.PowerShellWorker

Function Start-NewOrchestration 0.3.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Get-OutputBinding 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Invoke-ActivityFunction 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Push-OutputBinding 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Set-FunctionInvocationContext 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Start-DurableExternalEventListener 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Start-DurableTimer 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Stop-DurableTimerTask 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Trace-PipelineObject 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

Cmdlet Wait-DurableTask 3.0.0.0 Microsoft.Azure.Functions.PowerShellWorker

There are a few commands added in this new version to support the Human Interaction pattern, so let’s take a look at their syntax.

Send-DurableExternalEvent [-InstanceId] <string> [-EventName] <string> [[-EventData] <Object>] [-TaskHubName <string>] [-ConnectionName <string>] [<CommonParameters>]

Start-DurableExternalEventListener -EventName <string> [-NoWait] [<CommonParameters>]

Start-DurableTimer -Duration <timespan> [-NoWait] [<CommonParameters>]

Stop-DurableTimerTask -Task <DurableTimerTask> [<CommonParameters>]

Wait-DurableTask -Task <DurableTask[]> [-Any] [<CommonParameters>]

Send-DurableExternalEvent can be used to send an external event to another app, for example an approval. If we wanted to we could use it together with some sort of monitoring function to build a complex solution that sent automated approvals based on certain criteria, but we won’t use this command today.

Start-DurableExternalEventListener is what we will use to capture an external approval event, which we define with a name that the external client has to match when sending the event.

Start-DurableTimer has gotten a -NoWait switch to start it asynchronously, which is new if we compare it to the previous version that we explored earlier.

Stop-DurableTimerTask stops a durable timer started asynchronously, used in a similar way to how we capture the output of activity functions run in parallel by awaiting their results.

Wait-DurableTask is the command that replaced Wait-ActivityFunction, which now also supports waiting for results from other tasks such as external events or timers. Note the switch parameter -Any which will be key to building our approval workflow.

Let’s use these new commands to build a concept approval app with a feature to escalate the approval in case we wait for too long!

We want to first trigger an approval request and wait for a response for a certain time. If there is no response we will escalate the matter. Depending on what the approval is about we could, for example, send it to the manager of the person set for approval.

Any actual approval integrations will not be implemented in this example. As with the Monitor pattern we will only output a short message, but you can easily extend it to suit the needs of your systems.

For example you could send an email with a link that triggers another function with forced Azure AD authentication, that sends an approval event to this one and presents a simple feedback page for the user. You could also extend the example to have functionality to decline the approval.

# Orchestrator Function - ApprovalOrchestrator

param($Context)

$ApprovalTimeOut = New-TimeSpan -Days $Context.Input.TimeoutDays

Invoke-ActivityFunction -FunctionName "RequestApproval"

$DurableTimeoutEvent = Start-DurableTimer -Duration $ApprovalTimeOut -NoWait

$ApprovalEvent = Start-DurableExternalEventListener -EventName "ApprovalEvent" -NoWait

# The -Any parameter picks the first event to occur, instead of waiting for all of them

$FirstEvent = Wait-DurableTask -Task @($ApprovalEvent, $DurableTimeoutEvent) -Any

if ($ApprovalEvent -eq $FirstEvent) {

Stop-DurableTimerTask -Task $DurableTimeoutEvent

Invoke-ActivityFunction -FunctionName "ProcessApproval" -Input $ApprovalEvent

}

else {

Invoke-ActivityFunction -FunctionName "EscalateApproval"

}

Our orchestration starts by reading input from the client function that starts the workflow, in the same way as in the Monitor pattern. In this case I’ve instead provided a number representing how many days the function will wait for a response before escalating.

We trigger an approval request and then use the timeout value to start a durable timer asynchronously, which continues the script but lets us save the task in a variable that we can use to await the timer.

The main command for the Human Interaction pattern is the next part where we start an external event listener for an event named “ApprovalEvent”, also asynchronously. This lets us trigger the approval from an external source, either using the new command Send-DurableExternalEvent from another function app, or using a normal API call to our durable function in the same way as we did when we looked at the status of our completed first example.

Next we use Wait-DurableTask to listen for or await the first event to trigger among the two tasks that we’ve stored in variables. If the first event to wake our function from its sleep is the approval event, we trigger the activity function “ProcessApproval”, otherwise we trigger “EscalateApproval” since it would mean that our timer would have run out.

# Activity Function - RequestApproval

param($InputData)

# Implement a mechanism for requesting approval here

"Requested approval!"

# Activity Function - ProcessApproval

param($InputData)

# Implement a mechanism for processing approval here

"Processed approval!"

# Activity Function - EscalateApproval

param($InputData)

# Implement a mechanism for escalating approval here

"Escalated approval!"

The three activity functions simply output text for the sake of the example.

To finish up the example, let’s try running it. Press F5 or run the following command:

func host start

In a separate PowerShell session we will start a new orchestration by invoking the endpoint. For this example I created a new client function called “ApprovalStart” to pass the timeout days as input, which you can find together with the rest of the code on GitHub.

$Result = Invoke-RestMethod 'http://localhost:7071/api/ApprovalStart'

If you look at your console where you are running the app it should now have started and you should have the output “Requested approval!”, which means that the code is working.

We now need to raise the approval event for our function from the separate PowerShell session, which we can do with the HTTP API we looked at earlier, also provided as an endpoint in $Result. Make sure to replace the code parameter with your own.

Invoke-RestMethod "http://localhost:7071/runtime/webhooks/durabletask/instances/$($Result.id)/raiseEvent/ApprovalEvent?code=<code>" -Method Post -ContentType 'application/json'
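If you’d rather not build the URL by hand, the endpoint in $Result can be used directly. The sketch below assumes that the start response contains a sendEventPostUri property with an {eventName} placeholder, which is how the Durable Functions HTTP API documentation describes it. The function name Send-ApprovalEvent and the shape of the event data are my own.

```powershell
# Hypothetical helper: raises an external event using the endpoint from the start response
function Send-ApprovalEvent {
    param(
        # The object returned when the orchestration was started
        [Parameter(Mandatory)]
        $StartResult,

        [string]$EventName = 'ApprovalEvent',

        # Optional payload, sent as the JSON body of the request
        $EventData = $true
    )

    # Fill in the {eventName} placeholder of the endpoint
    $Uri = $StartResult.sendEventPostUri.Replace('{eventName}', [uri]::EscapeDataString($EventName))

    $Body = $EventData | ConvertTo-Json

    Invoke-RestMethod -Uri $Uri -Method Post -ContentType 'application/json' -Body $Body
}

# Usage, with $Result from the call that started the orchestration:
# Send-ApprovalEvent -StartResult $Result
```

The event name has to match the one the orchestrator is listening for, in this case ApprovalEvent.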

Looking back to where the function is running we can now see “Processed approval!” which means that the workflow was successful.

Conclusion

Always dig a little deeper! Question why things aren’t working, explore! I wouldn’t have been able to write this post if I simply looked at the documentation and said “oh, okay”. When I looked at the commands available and tried to understand what they did, I figured that there had to be something more. That said, I really think that Microsoft’s documentation is a goldmine. Even if it’s not always up to date with PowerShell you can usually learn a thing or two by looking at the other supported languages.

Throughout the post I’ve linked to various sources of information where I learned about the subject and all my code from this project is on GitHub. If you want even more great code examples you can take a look at the PowerShell worker repository that I found very helpful in learning.

This post was quite a bit longer than usual, but there is so much to explore when it comes to Durable Functions that I really couldn’t help it. I felt that the more I learned about the topic, the more there was to learn. Luckily it only means that we can better utilize it when it fits our needs, so I hope you got something to bring with you from this durable wall of text. If you still want more, why not take a look at what’s going on in the storage account that the function app is connected to, where it saves state and run history?