Azure Blob Storage API with OAuth 2.0

I frequently find myself wanting to trim away dependencies that I’m not in control of. When things aren’t working, I want to ensure that I can troubleshoot all of the components in my solution.

Recently, a coworker of mine was facing some issues with an Azure Function mysteriously crashing without any error. It was running PowerShell, and after comparing hundreds of thousands of Azure Storage Blobs it ran out of memory, killing the function host entirely. The function took some time to run, so while my coworker solved the crashing issue with batching, I wanted to see if we could speed up the comparison by using the REST API to retrieve the blob information instead of the Az PowerShell module.

Today we will take a look at how we can use our Azure AD credentials with the Azure REST API through OAuth, to manage Storage Blobs from PowerShell.

To play along, you will need:

- An Azure Subscription

- PowerShell

- The Az module (optional, for creating the storage account)

- The AzAuth module (optional, for getting a token)

The Storage Account

If you don’t have a storage account lying around, start with creating one. You can do this in multiple ways:

- Using the Azure Portal

- With the Az PowerShell module

- With Azure CLI

- Through a Bicep or ARM template deployment.

- etc …

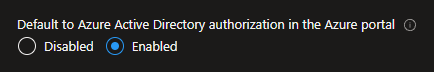

I will deploy the simple Bicep template below with a hard-coded storage account name as an example. Note that we’re setting defaultToOAuthAuthentication to true to better visualize the privileges that the API uses.

resource sa 'Microsoft.Storage/storageAccounts@2022-05-01' = {
  name: 'storageapi${uniqueString(resourceGroup().id)}'
  kind: 'StorageV2'
  location: resourceGroup().location
  sku: {
    name: 'Standard_LRS'
  }
  properties: {
    supportsHttpsTrafficOnly: true
    defaultToOAuthAuthentication: true
  }
}

output storageName string = sa.name

If you want to use the Az module to create a new resource group and deploy the template to it, you can connect to your tenant with Connect-AzAccount and then run these two commands.

New-AzResourceGroup -Name 'storageapi-rg' -Location 'westeurope'

New-AzResourceGroupDeployment -ResourceGroupName 'storageapi-rg' -TemplateFile 'storage.bicep'

Authentication

There are various ways we can authenticate to a storage account in Azure, such as

- Storage Account Keys,

- Connection Strings, or

- Shared Access Signature (SAS) URIs,

but sometimes we don’t want a secret string (read: password) lying around. If I’m running it myself and don’t mind the interactive authentication, OAuth is hard to beat in terms of security. So that’s what we’re using today!

Azure AD Authentication

As we saw in the template I deployed earlier, I set the property defaultToOAuthAuthentication to true. This makes the Azure Portal experience the same as when exploring the API in terms of access. If the storage account was not created with this setting, you can go to the configuration section and enable it.

Don’t do this on an account that other people are using, since it will break the browsing experience if you don’t have the correct roles!

To use the API with Azure AD authentication and OAuth, we need to give ourselves one of the roles that Microsoft lists as built-in data plane roles:

- Storage Blob Data Owner: Use to set ownership and manage POSIX access control for Azure Data Lake Storage Gen2. For more information, see Access control in Azure Data Lake Storage Gen2.

- Storage Blob Data Contributor: Use to grant read/write/delete permissions to Blob storage resources.

- Storage Blob Data Reader: Use to grant read-only permissions to Blob storage resources.

There are different roles for working with other Azure Storage services such as Tables or Files.

How to Borrow a Book

There are a lot of parts that go into the retrieval of a token for the API, so I’d like to make a parallel to borrowing a book at a library.

When going to borrow a book, we need a few pieces of information. First of all, we need to know which library we’re going to. If there’s no one to ask inside, we can use the genre of the book to find the correct section. Once we find the book and want to borrow it, we make an agreement with the library specifying the terms for borrowing the book, and the duration.

Maybe the agreement says read access only? 😉

Similarly, when we’re getting a token for Azure with the OAuth flow, we need to specify a tenant that the token will be valid for. Then we need to specify a resource for which to get the token, which roughly translates to which API we want to use, such as the Azure Storage services or Graph API. Within the resource we can specify scopes to set the privileges that the token can enable, provided our account has access to them. The token is also given a duration: a timestamp after which it expires and no longer works.

| Library 📚 | Azure Auth 🔑 |
|---|---|
| Library | Tenant |
| Section | Resource |
| Agreement Terms | Scopes |
| Duration | Duration |

The good thing is that we don’t have to pay a fee if we’re late with returning our token!
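To make the mapping above a little more concrete, the sketch below decodes the payload of an access token so that the tenant, resource, scopes and expiry can be inspected. It assumes the token is a JWT, which Azure AD access tokens are. `ConvertFrom-JwtPayload` is a hypothetical helper name, not part of any module, and the sample token is built by hand with made-up values. The signature is not validated, so this is for inspection only.

```powershell
# Hypothetical helper: decodes the payload (middle part) of a JWT.
# It does NOT validate the signature, so use it for inspection only.
function ConvertFrom-JwtPayload {
    param (
        [Parameter(Mandatory)]
        [string] $Jwt
    )

    # A JWT consists of three dot-separated parts: header.payload.signature
    $Payload = $Jwt.Split('.')[1]

    # The payload is base64url encoded: swap the URL-safe characters
    # and restore the padding before decoding
    $Base64 = $Payload.Replace('-', '+').Replace('_', '/')
    switch ($Base64.Length % 4) {
        2 { $Base64 += '==' }
        3 { $Base64 += '=' }
    }

    $Bytes = [System.Convert]::FromBase64String($Base64)
    [System.Text.Encoding]::UTF8.GetString($Bytes) | ConvertFrom-Json
}

# Build a fake, unsigned sample token with made-up claim values
$SampleJson = '{"aud":"https://storageapi12345.blob.core.windows.net","tid":"00000000-0000-0000-0000-000000000000","scp":"user_impersonation","exp":1700000000}'
$SampleBytes = [System.Text.Encoding]::UTF8.GetBytes($SampleJson)
$SamplePayload = [System.Convert]::ToBase64String($SampleBytes).TrimEnd('=').Replace('+', '-').Replace('/', '_')
$SampleToken = "header.$SamplePayload.signature"

$Claims = ConvertFrom-JwtPayload -Jwt $SampleToken
$Claims.tid   # Library         -> tenant
$Claims.aud   # Section         -> resource
$Claims.scp   # Agreement terms -> scopes
[System.DateTimeOffset]::FromUnixTimeSeconds($Claims.exp)   # Duration -> expiry
```

To try it on a real token, pass the raw access token string to `-Jwt` instead of the sample.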

Retrieving a Token

Now that we know which information we need, let’s get a token. I’m going to use the module AzAuth which I wrote to have a simple way of getting a token for a specific scope, but the Az module or the Azure CLI works as well.

In our case we will use the Blob endpoint for the storage account as the resource to get the token for, since it’s the host of the API. It’s assembled as below, but can also be found under the endpoints section in the Azure Portal. Set $StorageAccountName to the name of your account if you want to run the code below, as well as $TenantId to the id of the tenant that the storage account is in.

# Get-AzToken from the AzAuth module
$Uri = "https://$StorageAccountName.blob.core.windows.net"
$Token = Get-AzToken -Resource $Uri -TenantId $TenantId -Scopes '.default' -Interactive

Running the code will open a browser for an interactive login, and $Token will contain the resulting token with some metadata, such as resource, scopes and when it expires.

The Blob Storage API

You could say that there are two parts of the Azure Blob Storage API: one for the storage account itself (the control plane), and one for the blobs within (the data plane).

The goal today is to list all containers, all blobs within, and download each of them.

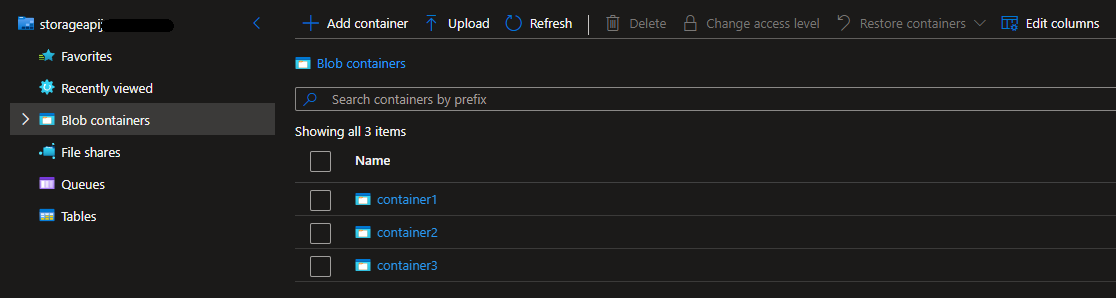

Before we do anything else, I’m going to upload some data to the storage account. I’m creating three containers and uploading a few random files to each of them.

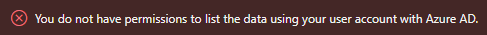

If we enabled the AAD experience in the portal earlier, but haven’t yet added ourselves to a role, clicking the containers to upload our files will show a clear difference between the control and data plane in Azure.

I’m giving myself the Storage Blob Data Contributor role to first be able to add some files, and then read them using the API. If you’re using the Azure Portal to do this, you may have to log out and in again for the access to work.

We also need to get a new token. I’m leaving out the scope parameter this time, which will give me the .default scope, not limiting my access.

$Token = Get-AzToken -Resource $Uri -TenantId $TenantId -Interactive

Tip: If you skip the -Interactive switch, the module will try to find tokens already cached in your environment from other sources.

Headers

When calling the REST API for the Azure Storage services, we need to specify at least two things as headers for our API requests: our token for authorization, and the version of the API.

The Azure Storage API is versioned by dates, and we need to specify a version of at least 2017-11-09 to have OAuth support.

$Headers = @{

'Authorization' = "Bearer $Token"

'x-ms-version' = '2021-08-06' # Current version as of writing

}

Listing Containers

By sending a request with our headers, we can now list all containers in our storage account using the Blob endpoint and an extra parameter.

Invoke-RestMethod -Headers $Headers -Uri "$Uri/?comp=list"

Looking at the result, it looks mostly right. Mostly, because by the first symbols we can see that there is something wrong with the encoding.

<?xml version="1.0" encoding="utf-8"?>

<EnumerationResults ServiceEndpoint="https://storageapi12345.blob.core.windows.net/">

<Containers>

<Container>

<Name>container1</Name>

<Properties>

<Last-Modified>Fri, 28 Oct 2022 12:25:46 GMT</Last-Modified>

<Etag>"0x8DAB8DF8A2E46AD"</Etag>

<LeaseStatus>unlocked</LeaseStatus>

<LeaseState>available</LeaseState>

<DefaultEncryptionScope>$account-encryption-key</DefaultEncryptionScope>

<DenyEncryptionScopeOverride>false</DenyEncryptionScopeOverride>

<HasImmutabilityPolicy>false</HasImmutabilityPolicy>

<HasLegalHold>false</HasLegalHold>

<ImmutableStorageWithVersioningEnabled>false</ImmutableStorageWithVersioningEnabled>

</Properties>

</Container>

<Container>

<Name>container2</Name>

<Properties>

...

</Properties>

</Container>

<Container>

<Name>container3</Name>

<Properties>

...

</Properties>

</Container>

</Containers>

<NextMarker />

</EnumerationResults>

This issue stems from how PowerShell decodes or assumes the content-type of the response. There is an issue related to the matter on GitHub.

My solution is to use the underlying .NET APIs instead. We’ll create a small function to use for the rest of the post, since the problem is the same for other endpoints. I intentionally left out stuff like error handling and validation to keep it more readable.

Another solution would have been to output the response to a file and read from the file to avoid PowerShell misinterpreting the response data.

If you’re using something other than PowerShell for the requests, you may find that it’s all working as expected and that you don’t need the code below.

function Invoke-AzStorageWebRequest {
    param (
        $Uri,
        $Token
    )

    # Create WebRequest object
    $Request = [System.Net.WebRequest]::Create($Uri)

    $Request.Headers.Add('Authorization', "Bearer $Token")
    $Request.Headers.Add('x-ms-version', '2021-08-06')

    # Execute request, save response
    $Response = $Request.GetResponse()
    # Read response with a streamreader
    $ResponseStream = $Response.GetResponseStream()
    $StreamReader = [System.IO.StreamReader]::new($ResponseStream)

    # Output the data
    $StreamReader.ReadToEnd()
}

We can then use the new function to send the same request again.

Invoke-AzStorageWebRequest -Uri "$Uri/?comp=list" -Token $Token

Which yields a much better result, without unwanted BOM encoding symbols!

<?xml version="1.0" encoding="utf-8"?>

<EnumerationResults ServiceEndpoint="https://storageapi12345.blob.core.windows.net/">

<Containers>

...

</Containers>

<NextMarker />

</EnumerationResults>

Since the result is XML, we can also parse it easily and access the data like we would with any property.

([xml](Invoke-AzStorageWebRequest -Uri "$Uri/?comp=list" -Token $Token)).EnumerationResults.Containers.Container.Name

This lists only the names of the containers, which is what we need to find all blobs within them.

container1

container2

container3

Listing Blobs

Using the same function, we can also list the blobs inside a container by specifying the container name and changing the URL slightly according to the Storage API documentation.

Invoke-AzStorageWebRequest -Uri "$Uri/container1?restype=container&comp=list" -Token $Token

The response is very similar, but instead contains metadata for each blob.

<?xml version="1.0" encoding="utf-8"?>

<EnumerationResults ServiceEndpoint="https://storageapi12345.blob.core.windows.net/" ContainerName="container1">

<Blobs>

<Blob>

<Name>example.svg</Name>

<Properties>

<Creation-Time>Fri, 28 Oct 2022 12:47:41 GMT</Creation-Time>

<Last-Modified>Fri, 28 Oct 2022 12:47:41 GMT</Last-Modified>

<Etag>0x8DAB8E29A0FD01E</Etag>

<Content-Length>1358</Content-Length>

<Content-Type>image/svg+xml</Content-Type>

<Content-Encoding />

<Content-Language />

<Content-CRC64 />

<Content-MD5>WkY4pM492Gf1G6UWSgD7lw==</Content-MD5>

<Cache-Control />

<Content-Disposition />

<BlobType>BlockBlob</BlobType>

<AccessTier>Hot</AccessTier>

<AccessTierInferred>true</AccessTierInferred>

<LeaseStatus>unlocked</LeaseStatus>

<LeaseState>available</LeaseState>

<ServerEncrypted>true</ServerEncrypted>

</Properties>

<OrMetadata />

</Blob>

<Blob>

<Name>storage.bicep</Name>

<Properties>

...

</Properties>

<OrMetadata />

</Blob>

<Blob>

<Name>textfile.txt</Name>

<Properties>

...

</Properties>

<OrMetadata />

</Blob>

</Blobs>

<NextMarker />

</EnumerationResults>

The response shows the three files I uploaded earlier, along with some metadata about each of them.
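Both list operations return at most 5000 items per response. When there are more, the `NextMarker` element (empty in the responses above) contains a value that can be sent back as the `marker` query parameter to get the next page. A minimal sketch of that loop follows. `Get-PagedBlobName` is a hypothetical helper, and the request itself is passed in as a script block so that the paging logic can be tried without a storage account. The two XML pages are made-up sample data.

```powershell
# Hypothetical helper: follows NextMarker until all pages are read.
# $Request is a script block that takes a URI and returns the XML response as a string.
function Get-PagedBlobName {
    param (
        [Parameter(Mandatory)]
        [string] $ContainerUri,

        [Parameter(Mandatory)]
        [scriptblock] $Request
    )

    $Marker = ''
    do {
        $PageUri = "$($ContainerUri)?restype=container&comp=list"
        if ($Marker) {
            # Continue where the previous page ended
            $PageUri += "&marker=$([uri]::EscapeDataString($Marker))"
        }

        $Page = [xml](& $Request $PageUri)

        # Output the blob names of this page
        foreach ($Node in $Page.SelectNodes('/EnumerationResults/Blobs/Blob/Name')) {
            $Node.InnerText
        }

        # An empty NextMarker means there are no more pages
        $Marker = $Page.EnumerationResults.NextMarker
    } while ($Marker)
}

# Made-up sample responses standing in for the API
$FirstPage = '<EnumerationResults><Blobs><Blob><Name>a.txt</Name></Blob><Blob><Name>b.txt</Name></Blob></Blobs><NextMarker>page2</NextMarker></EnumerationResults>'
$LastPage = '<EnumerationResults><Blobs><Blob><Name>c.txt</Name></Blob></Blobs><NextMarker /></EnumerationResults>'

$FakeRequest = {
    param ($PageUri)
    if ($PageUri -like '*marker=page2*') { $LastPage } else { $FirstPage }
}

$BlobNames = @(Get-PagedBlobName -ContainerUri 'https://example.invalid/container1' -Request $FakeRequest)
$BlobNames
```

Against the real API, the script block could wrap the function from earlier, for example `{ param ($PageUri) Invoke-AzStorageWebRequest -Uri $PageUri -Token $Token }`.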

Downloading Blobs

The final piece is to download the blobs we’ve found. This is almost the same request as before, but we remove the URL parameters and append the name of the blob.

If your file name contains symbols or special characters, you may be required to URL encode the file name so that the API reads it correctly.

As an example, we can try getting the content of our previously deployed Bicep template that I uploaded as one of the files.

Invoke-AzStorageWebRequest -Uri "$Uri/container1/storage.bicep" -Token $Token

The result is the content of the file as a string.

resource sa 'Microsoft.Storage/storageAccounts@2022-05-01' = {

name: 'storageapi${uniqueString(resourceGroup().id)}'

kind: 'StorageV2'

location: resourceGroup().location

sku: {

name: 'Standard_LRS'

}

properties: {

supportsHttpsTrafficOnly: true

defaultToOAuthAuthentication: true

}

}

output storageName string = sa.name
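The note above about URL encoding can be sketched as follows. `Get-BlobUri` is a hypothetical helper, and the blob name is a made-up example. Each path segment is encoded on its own, on the assumption that slashes in a blob name represent virtual directories and should stay as separators.

```powershell
# Hypothetical helper: builds a blob URI with an encoded blob name
function Get-BlobUri {
    param (
        [Parameter(Mandatory)]
        [string] $Uri,

        [Parameter(Mandatory)]
        [string] $Container,

        [Parameter(Mandatory)]
        [string] $BlobName
    )

    # Encode each path segment separately so that the slashes of
    # virtual directories are kept as separators
    $Segments = foreach ($Segment in $BlobName.Split('/')) {
        [uri]::EscapeDataString($Segment)
    }

    "$Uri/$Container/$($Segments -join '/')"
}

$BlobUri = Get-BlobUri -Uri 'https://storageapi12345.blob.core.windows.net' -Container 'container1' -BlobName 'my folder/report #1.txt'
$BlobUri
# https://storageapi12345.blob.core.windows.net/container1/my%20folder/report%20%231.txt
```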

Save-AzStorageBlobs

To conclude today’s API exploration, I’ve compiled what we’ve learned so far into a small script that downloads all blobs from all containers in a specified storage account.

If you decide to run the script, consider the amount of data within the account before you proceed.

# Set tenant id and storage name
$TenantId = '<tenant-id>'
$StorageAccountName = '<storage-account-name>'

# Assemble blob endpoint uri for the API requests
$Uri = "https://$StorageAccountName.blob.core.windows.net"

# Set up function to use for API calls
# This is to avoid encoding issues with Invoke-RestMethod/WebRequest
function Invoke-AzStorageWebRequest {
    param (
        $Uri,
        $Token
    )

    # Create WebRequest object
    $Request = [System.Net.WebRequest]::Create($Uri)

    $Request.Headers.Add('Authorization', "Bearer $Token")
    $Request.Headers.Add('x-ms-version', '2021-08-06')

    # Execute request, save response
    $Response = $Request.GetResponse()
    # Read response with a streamreader
    $ResponseStream = $Response.GetResponseStream()
    $StreamReader = [System.IO.StreamReader]::new($ResponseStream)

    # Output the data
    $StreamReader.ReadToEnd()
}

# Requires AzAuth module
# Make sure that you have a data role for blobs
# https://learn.microsoft.com/en-us/azure/storage/blobs/authorize-access-azure-active-directory#azure-built-in-roles-for-blobs
$Token = Get-AzToken -Resource $Uri -TenantId $TenantId -Interactive

# Get container names by casting result to XML and picking out only the container names
$ContainerNames = ([xml](Invoke-AzStorageWebRequest -Uri "$Uri/?comp=list" -Token $Token)).EnumerationResults.Containers.Container.Name

# Loop through all container names, get blob names and download blobs to files
foreach ($Container in $ContainerNames) {
    $BlobNames = ([xml](Invoke-AzStorageWebRequest -Uri "$Uri/$($Container)?restype=container&comp=list" -Token $Token)).EnumerationResults.Blobs.Blob.Name

    foreach ($Blob in $BlobNames) {
        Invoke-AzStorageWebRequest -Uri "$Uri/$Container/$Blob" -Token $Token | Out-File $Blob -Encoding utf8
    }
}
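One caveat with the script above: reading the response with a StreamReader and writing it with Out-File treats every blob as text. That is fine for the sample files here, but would likely damage binary blobs such as images or archives. A possible alternative, sketched below under that assumption, is to copy the response stream straight to a file. `Save-StreamToFile` is a hypothetical helper, and the example feeds it a MemoryStream with made-up bytes so that it can be run without a storage account.

```powershell
# Hypothetical helper: writes a stream to a file byte for byte
function Save-StreamToFile {
    param (
        [Parameter(Mandatory)]
        [System.IO.Stream] $Stream,

        # Use a full path, since .NET resolves relative paths against the
        # process working directory rather than the PowerShell location
        [Parameter(Mandatory)]
        [string] $Path
    )

    $FileStream = [System.IO.File]::Create($Path)
    try {
        $Stream.CopyTo($FileStream)
    }
    finally {
        # Always close the file, even if the copy fails
        $FileStream.Dispose()
    }
}

# Stand-in for a response stream: 256 bytes covering every byte value
$Bytes = [byte[]](0..255)
$Source = [System.IO.MemoryStream]::new($Bytes)
$Path = Join-Path ([System.IO.Path]::GetTempPath()) 'save-stream-example.bin'

Save-StreamToFile -Stream $Source -Path $Path
$Source.Dispose()

(Get-Item $Path).Length
```

In Invoke-AzStorageWebRequest, the stream returned by `$Response.GetResponseStream()` could be passed to a helper like this instead of being read with a StreamReader.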

Conclusion

I really like that more and more services in Azure are getting Azure AD Authentication as an option! It makes it much easier to build clean solutions that don’t store any secrets that risk ending up in the wrong hands. The final code from this post can be found on my GitHub.

Special thanks to my coworker Anders who gave me a great opportunity to explore the API!